AI in Game Development: Procedural Generation and Intelligent NPCs

Explore how artificial intelligence is used in modern game development. Learn about procedural generation, NPCs driven by machine learning, and AI tools that speed up production.


Artificial intelligence is reshaping game development. This goes well beyond NPCs that avoid walls: it covers procedurally generated worlds that are effectively endless, characters that learn from players, dynamically generated dialogue, and tools that can substantially speed up production. With GPT-4, Stable Diffusion, and accessible neural networks, indie developers can now build experiences that used to require studios with multi-million R&D budgets. This guide covers practical applications of AI, from classic algorithms to current machine learning techniques.

The State of AI in Games, 2025

A Revolution in Progress

Game AI has evolved from simple scripts into complex systems that rival human performance in specific contexts:

Recent Milestones:

- OpenAI Five beat the Dota 2 world champions (OG) in 2019 and won 99.4% of its matches against public players

- GPT-driven NPC dialogue explored in Cyberpunk 2077 mods (the shipped game uses scripted dialogue)

- No Man's Sky: 18 quintillion unique planets

- AI Dungeon: open-ended generated narratives

- NVIDIA ACE: NPCs with natural conversation

Market Adoption:

- 67% of AAA games use some form of ML

- 45% of indies are experimenting with AI tools

- $2.8B invested in gaming AI (2024)

- 200+ startups focused on game AI

- 85% of developers plan to adopt AI by 2026

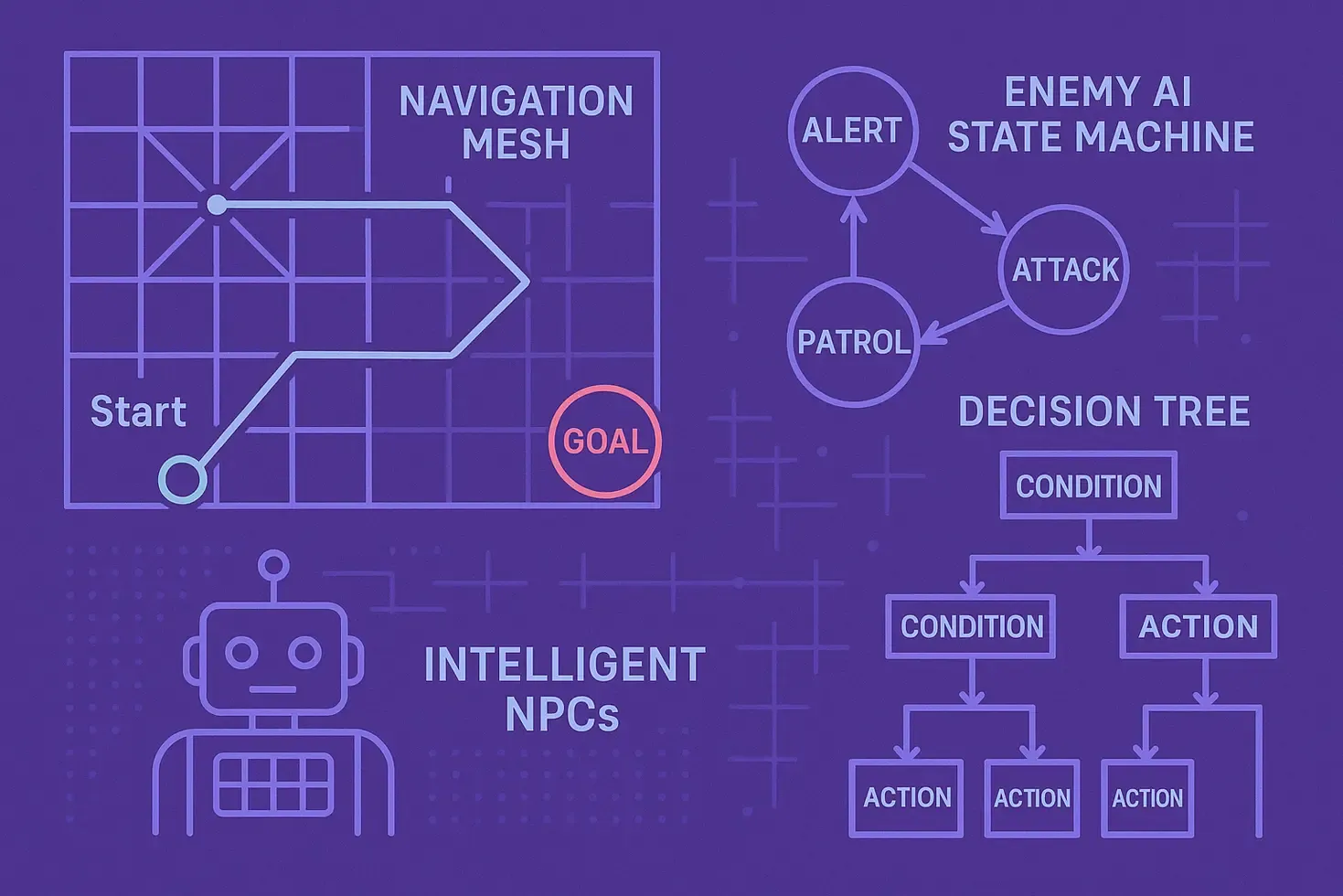

Categories of AI in Games

Traditional Game AI (Deterministic):

- Pathfinding (A*, Dijkstra)

- Decision trees

- State machines

- Behavior trees

- Rule-based systems
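As an illustration of the first item in this list, here is a minimal A* sketch in Python. It assumes a 4-connected grid in which `0` marks a free cell and `1` a wall, with unit movement cost; the function name `a_star` and the grid encoding are choices made for this example, not part of any engine API.

```python
import heapq

def a_star(grid, start, goal):
    """Shortest 4-connected path on a grid of 0 (free) / 1 (wall) cells."""
    def h(cell):
        # Manhattan distance: admissible for 4-connected, unit-cost moves
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    rows, cols = len(grid), len(grid[0])
    open_heap = [(h(start), 0, start)]   # entries are (f = g + h, g, cell)
    came_from = {}
    best_cost = {start: 0}
    while open_heap:
        _, cost, current = heapq.heappop(open_heap)
        if cost > best_cost[current]:
            continue  # stale heap entry, a cheaper route was found later
        if current == goal:
            path = [current]
            while current in came_from:
                current = came_from[current]
                path.append(current)
            return path[::-1]
        r, c = current
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if not (0 <= nr < rows and 0 <= nc < cols) or grid[nr][nc] == 1:
                continue
            new_cost = cost + 1
            if new_cost < best_cost.get(nxt, float("inf")):
                best_cost[nxt] = new_cost
                came_from[nxt] = current
                heapq.heappush(open_heap, (new_cost + h(nxt), new_cost, nxt))
    return None  # goal unreachable
```

With a zero heuristic the same loop behaves like Dijkstra's algorithm; the heuristic only changes the order in which cells are expanded.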

Procedural Generation (Algorithmic):

- Terrain generation

- Dungeon creation

- Item/weapon generation

- Quest generation

- Music composition

Machine Learning (Adaptive):

- Neural networks for NPCs

- Reinforcement learning

- Player modeling

- Content generation via GAN

- Natural language processing

Hybrid Systems (Future):

- ML + traditional techniques for robustness

- Procedural + curated content

- AI-assisted development

- Real-time adaptation

Procedural Generation: Infinite Worlds

Mathematical Foundations

Noise Functions: The Basis of Everything

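// Perlin noise for natural-looking terrain
using UnityEngine;

public class TerrainGenerator {
    private float[,] GenerateHeightMap(int width, int height, float scale) {
        float[,] heightMap = new float[width, height];
        for (int x = 0; x < width; x++) {
            for (int y = 0; y < height; y++) {
                float xCoord = (float)x / width * scale;
                float yCoord = (float)y / height * scale;

                // Sum several octaves for detail: each octave doubles the
                // frequency and halves the amplitude.
                // The accumulator is called "elevation" because a local named
                // "height" would clash with the method parameter (compile error).
                float elevation = 0f;
                float amplitude = 1f;
                float frequency = 1f;
                float amplitudeSum = 0f;
                for (int i = 0; i < 6; i++) {
                    elevation += Mathf.PerlinNoise(xCoord * frequency, yCoord * frequency) * amplitude;
                    amplitudeSum += amplitude;
                    amplitude *= 0.5f;
                    frequency *= 2f;
                }

                // Normalize so the result stays in roughly [0, 1], the range
                // that threshold-based biome rules expect
                heightMap[x, y] = elevation / amplitudeSum;
            }
        }
        return heightMap;
    }
}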

Biome Distribution:

public enum Biome { Desert, Forest, Tundra, Ocean, Mountain }

public Biome GetBiome(float height, float temperature, float humidity) {
    if (height < 0.3f) return Biome.Ocean;
    if (height > 0.8f) return Biome.Mountain;

    if (temperature < 0.3f) {
        return humidity < 0.5f ? Biome.Tundra : Biome.Forest;
    } else if (temperature > 0.7f) {
        return humidity < 0.3f ? Biome.Desert : Biome.Forest;
    }
    return Biome.Forest; // Default
}

Advanced Dungeon Generation

Wave Function Collapse Algorithm:

using System.Collections.Generic;
using UnityEngine;

// Skeleton of Wave Function Collapse. The helpers HasUncollapsed,
// GetLowestEntropyPosition, GetValidTiles, WeightedRandom and
// UpdateNeighborConstraints are left to the implementer.
public class WFCGenerator {
    private class Tile {
        public string type;
        public List<string> validNeighbors;
        public float weight;
    }

    private Tile[,] grid;
    private List<Tile> tileset;

    public void Generate(int width, int height) {
        // A null cell is still in superposition: every tile its neighbors
        // allow remains possible. A new array is already filled with null.
        grid = new Tile[width, height];

        // Collapse iteratively, always starting with the most constrained cell
        while (HasUncollapsed()) {
            Vector2Int pos = GetLowestEntropyPosition();
            CollapseTile(pos);
            Propagate(pos);
        }
    }

    private void CollapseTile(Vector2Int pos) {
        List<Tile> possibilities = GetValidTiles(pos);
        grid[pos.x, pos.y] = WeightedRandom(possibilities);
    }

    private void Propagate(Vector2Int pos) {
        Queue<Vector2Int> toCheck = new Queue<Vector2Int>();
        toCheck.Enqueue(pos);
        while (toCheck.Count > 0) {
            Vector2Int current = toCheck.Dequeue();
            // UpdateNeighborConstraints is assumed to return the neighbors
            // whose options shrank, so the change keeps spreading outward
            foreach (Vector2Int changed in UpdateNeighborConstraints(current)) {
                toCheck.Enqueue(changed);
            }
        }
    }
}
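Because the C# skeleton leaves its helpers undefined, a complete toy version may help. The Python sketch below runs the same collapse-and-propagate loop on a hypothetical three-tile set (`water`, `sand`, `grass`) in which water and grass may never touch. It picks tiles uniformly rather than by weight, and the tile names and the `COMPATIBLE` table are assumptions made for the example.

```python
import random

# Which tiles may sit next to each other (symmetric); illustrative rules
COMPATIBLE = {
    "water": {"water", "sand"},
    "sand": {"water", "sand", "grass"},
    "grass": {"sand", "grass"},
}

def wave_function_collapse(width, height, seed=0):
    rng = random.Random(seed)
    # Every cell starts in superposition: the set of all tiles
    domains = {(x, y): set(COMPATIBLE) for x in range(width) for y in range(height)}

    def neighbors(x, y):
        for pos in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
            if pos in domains:
                yield pos

    while True:
        open_cells = [c for c, d in domains.items() if len(d) > 1]
        if not open_cells:
            break
        # Lowest entropy here means fewest remaining options; ties are random
        fewest = min(len(domains[c]) for c in open_cells)
        cell = rng.choice([c for c in open_cells if len(domains[c]) == fewest])
        domains[cell] = {rng.choice(sorted(domains[cell]))}  # collapse

        # Propagate: shrink neighbor domains until nothing changes
        stack = [cell]
        while stack:
            current = stack.pop()
            allowed = set().union(*(COMPATIBLE[t] for t in domains[current]))
            for n in neighbors(*current):
                reduced = domains[n] & allowed
                if not reduced:
                    raise ValueError("contradiction: no tile fits at %r" % (n,))
                if reduced != domains[n]:
                    domains[n] = reduced
                    stack.append(n)

    return [[next(iter(domains[(x, y)])) for x in range(width)] for y in range(height)]
```

This tile set cannot contradict itself because `sand` is compatible with everything; with stricter rules a real generator would need backtracking or a restart when the `ValueError` branch is hit.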

Rules for a Valid Dungeon:

public class DungeonValidator {
    private const int MaxDeadEnds = 3; // illustrative threshold, tune per game

    public bool IsValidDungeon(Room[,] dungeon) {
        // Are all rooms connected?
        if (!AllRoomsConnected(dungeon)) return false;

        // Is there an entrance and an exit?
        if (!HasEntryAndExit(dungeon)) return false;

        // Is the difficulty evenly distributed?
        if (!BalancedDifficulty(dungeon)) return false;

        // Is all loot reachable?
        if (!AllLootReachable(dungeon)) return false;

        // Are frustrating dead ends kept to a minimum?
        if (CountDeadEnds(dungeon) > MaxDeadEnds) return false;

        return true;
    }
}
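Two of these checks reduce to simple graph operations. The sketch below assumes the dungeon is available as an undirected adjacency map (`doors`, mapping each room id to the rooms it connects to); that representation and the function names are assumptions for illustration, not the `Room[,]` layout used above.

```python
from collections import deque

def all_rooms_connected(doors):
    """True if every room is reachable from an arbitrary starting room."""
    if not doors:
        return True
    start = next(iter(doors))
    seen = {start}
    queue = deque([start])
    while queue:  # breadth-first search over door connections
        room = queue.popleft()
        for neighbor in doors.get(room, ()):
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append(neighbor)
    return seen >= set(doors)

def count_dead_ends(doors):
    """Rooms with exactly one door are dead ends."""
    return sum(1 for exits in doors.values() if len(exits) == 1)
```

Running the validator after generation and regenerating on failure is a common way to combine random generation with hard design constraints.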


Item and Weapon Generation

Procedural Affix System:

using System.Collections.Generic;
using UnityEngine;

public class ItemGenerator {
    private class Affix {
        public string name;
        public StatModifier modifier;
        public float weight;
        public int minLevel;
    }

    public Weapon GenerateWeapon(int itemLevel, Rarity rarity) {
        Weapon weapon = new Weapon();

        // Base stats (weapon.baseType is assumed to be set by the Weapon constructor)
        weapon.damage = Random.Range(itemLevel * 5, itemLevel * 8);
        weapon.speed = Random.Range(0.8f, 1.5f);

        // Number of affixes depends on rarity. The int overload of
        // Random.Range excludes the upper bound, so Rare yields 1 or 2.
        int affixCount = rarity switch {
            Rarity.Common => 0,
            Rarity.Rare => Random.Range(1, 3),
            Rarity.Epic => Random.Range(3, 5),
            Rarity.Legendary => Random.Range(5, 7),
            _ => 0
        };

        // Apply affixes; passing "applied" lets GetRandomAffix avoid duplicates
        List<Affix> applied = new List<Affix>();
        for (int i = 0; i < affixCount; i++) {
            Affix affix = GetRandomAffix(itemLevel, applied);
            weapon.AddAffix(affix);
            applied.Add(affix);
        }

        // Generate name
        weapon.name = GenerateItemName(weapon.baseType, applied);
        return weapon;
    }

    private string GenerateItemName(string baseType, List<Affix> affixes) {
        if (affixes.Count == 0) return baseType;

        // Prefix + Base + Suffix pattern, e.g. "Flaming Sword of Frost"
        string prefix = affixes[0].name;
        string suffix = affixes.Count > 1 ? $"of {affixes[1].name}" : "";
        return $"{prefix} {baseType} {suffix}".Trim();
    }
}
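The generator above relies on a `GetRandomAffix` helper that is not shown. One plausible reading, sketched here in Python, is weighted sampling without replacement from the affixes unlocked at the item's level. The affix table, its weights and the function name `pick_affixes` are invented for this example.

```python
import random

# (name, weight, min_level): hypothetical data for illustration only
AFFIXES = [
    ("Flaming", 10, 1),
    ("Frozen", 10, 1),
    ("Swift", 6, 5),
    ("Vampiric", 3, 10),
]

def pick_affixes(item_level, count, rng):
    """Pick up to `count` distinct affixes, favoring higher weights."""
    pool = [a for a in AFFIXES if a[2] <= item_level]
    chosen = []
    for _ in range(min(count, len(pool))):
        weights = [a[1] for a in pool]
        affix = rng.choices(pool, weights=weights, k=1)[0]
        chosen.append(affix[0])
        pool.remove(affix)  # no duplicates on the same item
    return chosen
```

For example, `pick_affixes(12, 2, random.Random(0))` returns two different names drawn from all four affixes, while a level-1 item can only roll `Flaming` and `Frozen`.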

Intelligent NPCs with Machine Learning

Modern Behavior Trees

Hybrid Architecture (Traditional + ML):

using UnityEngine;

public class SmartNPC : MonoBehaviour {
    private BehaviorTree behaviorTree;
    private NeuralNetwork decisionNetwork;

    void Start() {
        // Behavior tree for high-level actions: when an enemy is in sight,
        // retreat if health is low, otherwise let the network pick an attack.
        // The low-health check and Retreat are wrapped in their own Sequence;
        // placed directly in the Selector, Retreat would run only when health
        // is NOT low.
        behaviorTree = new BehaviorTreeBuilder()
            .Sequence()
                .Condition(() => EnemyInSight())
                .Selector()
                    .Sequence()
                        .Condition(() => HealthLow())
                        .Action(() => Retreat())
                    .End()
                    .Action(() => AttackDecision()) // ML goes here
                .End()
            .End()
            .Build();

        // Neural network for tactical decisions
        decisionNetwork = new NeuralNetwork(
            inputSize: 15,                      // World state
            hiddenLayers: new int[] { 32, 16 },
            outputSize: 5                       // Possible actions
        );
    }

    private ActionType AttackDecision() {
        // Gather inputs (the array must end up with inputSize entries)
        float[] inputs = new float[] {
            health / maxHealth,
            enemy.health / enemy.maxHealth,
            // Raw distance; consider scaling it to 0-1 like the other inputs
            Vector3.Distance(transform.position, enemy.position),
            (float)ammoCount / maxAmmo, // cast avoids integer division
            // ... more features
        };

        // The neural network decides
        float[] outputs = decisionNetwork.FeedForward(inputs);

        // Convert the highest-scoring output into an action
        int action = GetMaxIndex(outputs);
        return (ActionType)action;
    }
}
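To make the Selector and Sequence semantics concrete, here is a minimal behavior tree in Python built from closures. A Sequence succeeds only if every child succeeds, and a Selector succeeds at the first child that does. The NPC is a plain dictionary and the action names are placeholders; none of this mirrors a specific behavior tree library.

```python
def sequence(*children):
    # Runs children in order and stops at the first failure
    return lambda npc: all(child(npc) for child in children)

def selector(*children):
    # Runs children in order and stops at the first success
    return lambda npc: any(child(npc) for child in children)

def action(name):
    def run(npc):
        npc["log"].append(name)  # stand-in for real game logic
        return True
    return run

combat_tree = sequence(
    lambda npc: npc["enemy_in_sight"],
    selector(
        # Retreat only when health is low...
        sequence(lambda npc: npc["health"] < 0.3, action("retreat")),
        # ...otherwise fall through to the attack decision
        action("attack"),
    ),
)
```

Calling `combat_tree(npc)` on an NPC with an enemy in sight and `health` of `0.2` logs `retreat`; with `health` of `0.9` it logs `attack`; with no enemy in sight nothing runs and the tree reports failure.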

Reinforcement Learning for Combat AI

Deep Q-Learning Implementation:

# Python for training (export the model to Unity afterwards)
import random

import numpy as np
import tensorflow as tf

class CombatAI:
    def __init__(self, state_size=20, action_size=8):
        self.state_size = state_size
        self.action_size = action_size
        self.memory = []     # replay buffer of (state, action, reward, next_state, done)
        self.gamma = 0.95    # discount factor
        self.epsilon = 1.0   # exploration rate
        self.model = self._build_model()

    def _build_model(self):
        model = tf.keras.Sequential([
            tf.keras.layers.Dense(64, activation='relu', input_shape=(self.state_size,)),
            tf.keras.layers.Dense(64, activation='relu'),
            tf.keras.layers.Dense(32, activation='relu'),
            tf.keras.layers.Dense(self.action_size, activation='linear')
        ])
        model.compile(optimizer='adam', loss='mse')
        return model

    def remember(self, state, action, reward, next_state, done):
        # Store one transition so train() has something to sample
        self.memory.append((state, action, reward, next_state, done))

    def act(self, state):
        # Epsilon-greedy policy: explore with probability epsilon
        if np.random.random() <= self.epsilon:
            return np.random.randint(self.action_size)
        q_values = self.model.predict(state.reshape(1, -1), verbose=0)
        return int(np.argmax(q_values[0]))

    def train(self, batch_size=32):
        if len(self.memory) < batch_size:
            return
        batch = random.sample(self.memory, batch_size)
        for state, action, reward, next_state, done in batch:
            # Bellman target: reward plus discounted best future Q-value
            target = reward
            if not done:
                next_q = self.model.predict(next_state.reshape(1, -1), verbose=0)[0]
                target = reward + self.gamma * np.amax(next_q)
            target_f = self.model.predict(state.reshape(1, -1), verbose=0)
            target_f[0][action] = target
            self.model.fit(state.reshape(1, -1), target_f, epochs=1, verbose=0)

        # Decay exploration once per training call, with a floor of 0.01
        self.epsilon = max(self.epsilon * 0.995, 0.01)
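The update rule inside `train` is easier to see without the neural network. The sketch below applies the same Bellman target to a tabular Q-function stored as a list of lists, using only the standard library. The learning rate `alpha` and the function names are choices made for this illustration; the discount of 0.95 matches the value used above.

```python
import random

def q_learning_update(q, state, action, reward, next_state, done,
                      alpha=0.1, gamma=0.95):
    # Target = immediate reward + discounted value of the best next action
    best_next = 0.0 if done else max(q[next_state])
    target = reward + gamma * best_next
    # Move the current estimate a fraction alpha toward the target
    q[state][action] += alpha * (target - q[state][action])

def epsilon_greedy(q, state, epsilon, rng):
    # Explore with probability epsilon, otherwise exploit the best known action
    if rng.random() < epsilon:
        return rng.randrange(len(q[state]))
    return max(range(len(q[state])), key=lambda a: q[state][a])
```

The deep variant replaces the table lookup `q[state]` with a network prediction and the in-place update with a gradient step toward the same target.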

Training Loop in Unity:

using System.Collections;
using System.Collections.Generic;
using UnityEngine;

public class AITrainer : MonoBehaviour {
    private CombatEnvironment env;
    private DQNAgent agent; // hypothetical agent type exposing SelectAction
    private List<Experience> replayBuffer = new List<Experience>();

    IEnumerator TrainAgent() {
        for (int episode = 0; episode < 10000; episode++) {
            float totalReward = 0;
            State state = env.Reset();

            while (!env.IsEpisodeOver()) {
                // The agent chooses an action
                int action = agent.SelectAction(state);

                // Execute it in the environment
                var (nextState, reward, done) = env.Step(action);

                // Store the experience
                replayBuffer.Add(new Experience(state, action, reward, nextState, done));

                // Train periodically: every step of every 10th episode,
                // once the buffer holds enough samples
                if (replayBuffer.Count > 1000 && episode % 10 == 0) {
                    TrainOnBatch(32);
                }

                state = nextState;
                totalReward += reward;
                yield return null; // wait one frame so the game loop keeps running
            }
            Debug.Log($"Episode {episode}: Reward = {totalReward}");
        }
    }
}

Dialogue with GPT Integration

Dynamic NPC Conversations:

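using System.Collections.Generic;
using System.Linq;
using System.Threading.Tasks;
using UnityEngine;

public class DialogueAI : MonoBehaviour {
    private OpenAIAPI api;
    private string npcName;
    private string npcRole;
    private string npcPersonality;
    private string currentQuest;
    // Initialized up front so the first call does not hit a null list
    private readonly List<string> conversationHistory = new List<string>();

    async Task<string> GenerateResponse(string playerInput) {
        // Build the prompt with context; only the last 5 history lines are sent
        string history = string.Join("\n", conversationHistory.TakeLast(5));
        string prompt = $@"
            You are {npcName}, a {npcRole} in a fantasy RPG.
            Personality: {npcPersonality}
            Current quest: {currentQuest}
            Conversation history:
            {history}
            Player: {playerInput}
            {npcName}:";

        // Call the GPT API. The completions endpoint needs a completion model;
        // the chat model "gpt-3.5-turbo" is only served by the chat endpoint.
        // The method and request type follow whichever client library you use.
        var response = await api.Completions.CreateCompletion(new CompletionRequest {
            Model = "gpt-3.5-turbo-instruct",
            Prompt = prompt,
            MaxTokens = 100,
            Temperature = 0.8f,
            Stop = new[] { "\n", "Player:" }
        });

        string npcResponse = response.Choices[0].Text.Trim();

        // Safety and consistency filters
        npcResponse = FilterInappropriateContent(npcResponse);
        npcResponse = EnsureLoreConsistency(npcResponse);

        // Update history
        conversationHistory.Add($"Player: {playerInput}");
        conversationHistory.Add($"{npcName}: {npcResponse}");
        return npcResponse;
    }
}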
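The prompt-building step can be tested without any API call. The Python sketch below assembles the same prompt layout and keeps only a sliding window of recent history. As an extra precaution that the original snippet does not include, it flattens newlines in the player's text so that typed input cannot forge additional dialogue lines. The function name and parameters are assumptions for illustration.

```python
def build_prompt(npc_name, npc_role, personality, quest,
                 history, player_input, max_turns=5):
    """Assemble an NPC dialogue prompt from context and recent history."""
    recent = history[-max_turns:]  # sliding window keeps the prompt short
    # Collapse newlines so the player cannot inject fake "Name:" lines
    safe_input = " ".join(player_input.splitlines())
    lines = [
        f"You are {npc_name}, a {npc_role} in a fantasy RPG.",
        f"Personality: {personality}",
        f"Current quest: {quest}",
        "Conversation history:",
        *recent,
        f"Player: {safe_input}",
        f"{npc_name}:",
    ]
    return "\n".join(lines)
```

Capping the history bounds both cost and latency per request; longer-term memory would need a summary or retrieval step on top of this.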


AI Tools to Speed Up Production

Art Generation with Stable Diffusion

Concept Art Pipeline:

# Generate concepts quickly
import torch
from diffusers import StableDiffusionPipeline

class ConceptArtGenerator:
    def __init__(self):
        self.pipe = StableDiffusionPipeline.from_pretrained(
            "runwayml/stable-diffusion-v1-5",
            torch_dtype=torch.float16
        ).to("cuda")

    def generate_character_concepts(self, description, count=4):
        prompt = (
            f"Character concept art, {description}, "
            "digital painting, artstation, concept art, "
            "sharp focus, illustration, highly detailed, "
            "art by greg rutkowski and alphonse mucha"
        )
        negative_prompt = (
            "ugly, duplicate, morbid, mutilated, "
            "poorly drawn hands, mutation, deformed"
        )

        images = []
        for i in range(count):
            # The pipeline has no "seed" argument; reproducible seeds are
            # passed through a torch.Generator instead
            generator = torch.Generator("cuda").manual_seed(42 + i)
            image = self.pipe(
                prompt,
                negative_prompt=negative_prompt,
                height=768,
                width=512,
                num_inference_steps=50,
                guidance_scale=7.5,
                generator=generator
            ).images[0]
            images.append(image)
        return images

Texture Generation Workflow:

def generate_pbr_textures(material_description):
    # generate_texture, make_seamless and normalize_normal_map are helper
    # functions assumed to be defined elsewhere in the pipeline

    # Generate the diffuse (albedo) map
    diffuse = generate_texture(f"{material_description}, albedo map, seamless")

    # Generate the normal map, via AI or a traditional filter
    normal = generate_texture(f"{material_description}, normal map, blue purple")

    # Roughness
    roughness = generate_texture(f"{material_description}, roughness map, grayscale")

    # Post-process so the textures tile
    diffuse = make_seamless(diffuse)
    normal = normalize_normal_map(normal)

    return {
        'diffuse': diffuse,
        'normal': normal,
        'roughness': roughness
    }

Code Generation with Copilot

Accelerating Development:

// A descriptive comment prompts the generated code
// Create an enemy spawn system that increases difficulty over time
using UnityEngine;

public class EnemySpawner : MonoBehaviour {
    [SerializeField] private GameObject[] enemyPrefabs;
    [SerializeField] private Transform[] spawnPoints;
    [SerializeField] private AnimationCurve difficultyCurve;

    private float timeSinceStart = 0f;
    private float spawnTimer = 0f;

    void Update() {
        timeSinceStart += Time.deltaTime;
        spawnTimer += Time.deltaTime;

        // Difficulty is read from the curve over a 5-minute (300 s) ramp
        float currentDifficulty = difficultyCurve.Evaluate(timeSinceStart / 300f);
        float spawnInterval = Mathf.Lerp(5f, 0.5f, currentDifficulty);

        if (spawnTimer >= spawnInterval) {
            SpawnEnemy(currentDifficulty);
            spawnTimer = 0f;
        }
    }

    void SpawnEnemy(float difficulty) {
        // Copilot auto-completes the more complex logic
        int enemyTier = Mathf.FloorToInt(difficulty * enemyPrefabs.Length);
        enemyTier = Mathf.Clamp(enemyTier, 0, enemyPrefabs.Length - 1);

        Transform spawnPoint = spawnPoints[Random.Range(0, spawnPoints.Length)];
        GameObject enemy = Instantiate(enemyPrefabs[enemyTier], spawnPoint.position, Quaternion.identity);

        // Scale enemy stats with difficulty (skipped if the prefab has no EnemyStats)
        var stats = enemy.GetComponent<EnemyStats>();
        if (stats != null) {
            stats.health *= (1f + difficulty * 0.5f);
            stats.damage *= (1f + difficulty * 0.3f);
            stats.speed *= (1f + difficulty * 0.2f);
        }
    }
}

Music and SFX Generation

Procedural Music with AI:

# Using Magenta/TensorFlow
from magenta.models.music_vae import configs
from magenta.models.music_vae.trained_model import TrainedModel

class DynamicMusicGenerator:
    def __init__(self, checkpoint_path):
        # TrainedModel takes a config object plus the path to a downloaded
        # checkpoint; the caller supplies checkpoint_path
        self.model = TrainedModel(
            configs.CONFIG_MAP['cat-mel_2bar_big'],
            batch_size=4,
            checkpoint_dir_or_path=checkpoint_path
        )

    def generate_combat_music(self, intensity=0.5):
        # Generate a base melody; sample() returns a list of NoteSequences
        melody = self.model.sample(
            n=1,
            length=32,
            temperature=intensity
        )[0]

        # Add layers based on intensity. generate_drums, generate_bass,
        # combine_tracks and synthesize are helpers left to the implementer.
        if intensity > 0.3:
            drums = self.generate_drums(intensity)
            melody = self.combine_tracks(melody, drums)
        if intensity > 0.6:
            bass = self.generate_bass(intensity)
            melody = self.combine_tracks(melody, bass)

        # Convert to audio
        audio = self.synthesize(melody)
        return audio

    def adapt_to_gameplay(self, game_state):
        # Music reacts to gameplay
        intensity = self.calculate_intensity(game_state)
        return self.generate_combat_music(intensity)

Practical Applications by Genre

Roguelikes: Infinite Replayability

Dungeon + Enemy + Loot Generation:

using UnityEngine;

public class RoguelikeGenerator {
    public GameSession GenerateRun(int seed, int difficulty) {
        // Seeding makes the whole run reproducible from a single integer
        Random.InitState(seed);

        var session = new GameSession {
            dungeon = GenerateDungeon(difficulty),
            enemies = PopulateEnemies(difficulty),
            loot = GenerateLootTable(difficulty),
            bosses = SelectBosses(difficulty),
            events = GenerateEvents(difficulty)
        };

        // Ensure the run is balanced
        session = BalanceSession(session);
        return session;
    }

    private Dungeon GenerateDungeon(int difficulty) {
        int roomCount = 10 + difficulty * 5;
        var rooms = new Room[roomCount];

        for (int i = 0; i < roomCount; i++) {
            rooms[i] = new Room {
                type = GetWeightedRoomType(i, roomCount),
                size = Random.Range(3, 8),   // 3 to 7: upper bound is exclusive
                exits = Random.Range(1, 5),  // 1 to 4
                hazards = GenerateHazards(difficulty)
            };
        }

        ConnectRooms(rooms);
        return new Dungeon(rooms);
    }
}
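Seed-based determinism is what makes daily challenges and shareable run codes possible. A minimal Python sketch of the idea, assuming the same room-count formula as above and a simplified room record (the field names are illustrative):

```python
import random

def generate_run(seed, difficulty):
    # A private generator avoids sharing global random state with gameplay
    # code, which would otherwise make runs diverge
    rng = random.Random(seed)
    room_count = 10 + difficulty * 5
    return [
        {"size": rng.randint(3, 7), "exits": rng.randint(1, 4)}
        for _ in range(room_count)
    ]
```

Calling `generate_run(7, 2)` twice produces identical lists of 20 rooms, so two players entering the same seed explore the same layout.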

Strategy Games: Adaptive AI Opponents

AI That Learns the Player's Strategies:

using System.Collections.Generic;
using UnityEngine;

public class StrategicAI {
    private PlayerModel playerModel;
    private List<Strategy> availableStrategies;

    public Strategy SelectCounterStrategy() {
        // Analyze the player's patterns
        var playerTendencies = playerModel.AnalyzeTendencies();

        // Pick the most effective counter-strategy. Starting from negative
        // infinity guarantees a pick even when every score is zero or below.
        Strategy counter = null;
        float maxEffectiveness = float.NegativeInfinity;
        foreach (var strategy in availableStrategies) {
            float effectiveness = CalculateEffectiveness(strategy, playerTendencies);
            if (effectiveness > maxEffectiveness) {
                maxEffectiveness = effectiveness;
                counter = strategy;
            }
        }

        // Add occasional variation so the AI does not become predictable
        if (counter != null && Random.value < 0.2f) {
            counter = MutateStrategy(counter);
        }
        return counter;
    }

    // Called by the game whenever the player acts
    public void UpdatePlayerModel(PlayerAction action) {
        playerModel.RecordAction(action);

        // Retrain the model periodically
        if (playerModel.ActionCount % 100 == 0) {
            playerModel.Retrain();
        }
    }
}
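A player model does not have to be a trained network. The simplest version just counts what the player has done and counters the most frequent choice. The sketch below assumes a hypothetical three-way strategy triangle (`rush`, `turtle`, `boom`); the names and the `COUNTERS` table are invented for the example.

```python
from collections import Counter

# Which strategy beats which: illustrative rock-paper-scissors triangle
COUNTERS = {"rush": "turtle", "turtle": "boom", "boom": "rush"}

class PlayerModel:
    def __init__(self):
        self.counts = Counter()

    def record(self, strategy):
        self.counts[strategy] += 1

    def most_likely(self):
        # The strategy seen most often so far, or None with no data
        return self.counts.most_common(1)[0][0] if self.counts else None

def select_counter(model, default="boom"):
    return COUNTERS.get(model.most_likely(), default)
```

After recording three `rush` openings and one `turtle`, `select_counter` answers with `turtle`; with no observations it falls back to the default.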

Sandbox Games: Emergent Behaviors

Ecosystem Simulation:

using System.Collections.Generic;
using UnityEngine;

public class EcosystemAI : MonoBehaviour {
    private List<Agent> creatures = new List<Agent>();

    void SimulateEcosystem() {
        // Offspring are collected separately: adding to "creatures" while
        // iterating over it would throw InvalidOperationException
        var newborns = new List<Agent>();

        foreach (var creature in creatures) {
            // The neural network decides the behavior
            var inputs = GatherEnvironmentData(creature);
            var action = creature.brain.Decide(inputs);
            ExecuteAction(creature, action);

            // Evolution and learning
            if (creature.age % 100 == 0) {
                EvaluateFitness(creature);
                if (creature.fitness > reproductionThreshold) {
                    newborns.Add(Reproduce(creature));
                }
            }
        }
        creatures.AddRange(newborns);

        // Natural selection
        creatures.RemoveAll(c => c.health <= 0);

        // Occasional mutations
        if (Random.value < mutationRate) {
            MutateRandomCreature();
        }
    }

    private Agent Reproduce(Agent parent) {
        var child = Instantiate(parent);
        child.brain = parent.brain.Copy();
        child.brain.Mutate(0.1f); // 10% mutation
        return child;
    }
}

Optimization and Performance

AI Processing Budget

Frame Budget Distribution:

using System.Collections.Generic;
using UnityEngine;

public class AIScheduler : MonoBehaviour {
    private Queue<AITask> highPriority = new Queue<AITask>();
    private Queue<AITask> normalPriority = new Queue<AITask>();
    private Queue<AITask> lowPriority = new Queue<AITask>();

    private float frameTimeBudget = 0.005f; // 5ms per frame for AI

    void Update() {
        float startTime = Time.realtimeSinceStartup;

        // High priority first: at least one task always runs, and the
        // queue may use up to half of the budget
        while (highPriority.Count > 0) {
            ProcessTask(highPriority.Dequeue());
            if (Time.realtimeSinceStartup - startTime > frameTimeBudget * 0.5f)
                break;
        }

        // Normal priority while less than 80% of the budget is spent
        while (normalPriority.Count > 0 &&
               Time.realtimeSinceStartup - startTime < frameTimeBudget * 0.8f) {
            ProcessTask(normalPriority.Dequeue());
        }

        // Low priority only with whatever budget remains
        while (lowPriority.Count > 0 &&
               Time.realtimeSinceStartup - startTime < frameTimeBudget) {
            ProcessTask(lowPriority.Dequeue());
        }
    }
}
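The budgeting logic can be exercised outside the engine by injecting the clock. This Python sketch uses a single shared budget across priority queues, a simplification of the 50% / 80% / 100% thresholds above; `run_frame` and its parameters are names chosen for this example.

```python
import time
from collections import deque

def run_frame(queues, budget, clock=time.perf_counter):
    """Run queued tasks in priority order until the time budget is spent.

    queues: deques of zero-argument callables, highest priority first.
    Returns the number of tasks executed; leftovers wait for the next frame.
    """
    start = clock()
    executed = 0
    for queue in queues:
        while queue and clock() - start < budget:
            queue.popleft()()  # dequeue and run the task
            executed += 1
    return executed
```

Because the budget is checked before each task rather than during it, one slow task can still overrun the frame; long jobs should be split into smaller steps.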

LOD for AI

AI Complexity by Distance:

public class AILOD : MonoBehaviour {
    public enum LODLevel { Full, Reduced, Minimal, Disabled }

    private LODLevel GetAILOD(float distanceToPlayer) {
        if (distanceToPlayer < 10f) return LODLevel.Full;
        if (distanceToPlayer < 30f) return LODLevel.Reduced;
        if (distanceToPlayer < 100f) return LODLevel.Minimal;
        return LODLevel.Disabled;
    }

    void UpdateAI() {
        float distance = Vector3.Distance(transform.position, player.position);
        LODLevel lod = GetAILOD(distance);

        switch (lod) {
            case LODLevel.Full:
                UpdateInterval = 0; // Every frame
                UseComplexPathfinding = true;
                UseMLDecisions = true;
                break;
            case LODLevel.Reduced:
                UpdateInterval = 5; // Every 5 frames
                UseComplexPathfinding = true;
                UseMLDecisions = false; // Use simple heuristics
                break;
            case LODLevel.Minimal:
                UpdateInterval = 30; // Every 30 frames
                UseComplexPathfinding = false; // Simple steering
                UseMLDecisions = false;
                break;
            case LODLevel.Disabled:
                // No updates, just animate
                break;
        }
    }
}
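An update interval only saves time if something actually skips frames. The sketch below shows one way to gate updates, reusing the distance bands above and staggering NPCs by id so that characters in the same band do not all update on the same frame. The stagger is an addition for illustration, and an interval of 1 stands for "every frame".

```python
# (max distance, update every N frames), mirroring the LOD bands above
LOD_BANDS = [(10.0, 1), (30.0, 5), (100.0, 30)]

def update_interval(distance):
    for max_distance, interval in LOD_BANDS:
        if distance < max_distance:
            return interval
    return None  # beyond the last band: AI disabled

def should_update(npc_id, frame, distance):
    interval = update_interval(distance)
    if interval is None:
        return False
    # Offsetting by npc_id spreads the work across frames
    return (frame + npc_id) % interval == 0
```

With 100 NPCs in the 5-frame band, roughly 20 update on any given frame instead of all 100 updating together every fifth frame.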

Tools and Frameworks

Unity ML-Agents

Basic Setup:

using Unity.MLAgents;
using Unity.MLAgents.Sensors;
using Unity.MLAgents.Actuators;
using UnityEngine;

public class SmartAgent : Agent {
    public override void OnEpisodeBegin() {
        // Reset environment
        transform.position = startPosition;
        health = maxHealth;
    }

    public override void CollectObservations(VectorSensor sensor) {
        // Observe the environment (input to the neural network)
        sensor.AddObservation(transform.position);
        sensor.AddObservation(targetPosition);
        sensor.AddObservation(health / maxHealth);
        sensor.AddObservation(velocity);
    }

    public override void OnActionReceived(ActionBuffers actions) {
        // Execute the actions chosen by the neural network
        float moveX = actions.ContinuousActions[0];
        float moveZ = actions.ContinuousActions[1];
        bool shouldAttack = actions.DiscreteActions[0] == 1;

        Move(new Vector3(moveX, 0, moveZ));
        if (shouldAttack) Attack();
    }

    public override void Heuristic(in ActionBuffers actionsOut) {
        // Manual control for testing
        var continuousActions = actionsOut.ContinuousActions;
        continuousActions[0] = Input.GetAxis("Horizontal");
        continuousActions[1] = Input.GetAxis("Vertical");

        var discreteActions = actionsOut.DiscreteActions;
        discreteActions[0] = Input.GetButton("Fire1") ? 1 : 0;
    }
}

TensorFlow Integration

Loading Pre-trained Models:

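using System.IO;
using TensorFlow; // TensorFlowSharp bindings

public class TFModelRunner {
    private TFSession session;
    private TFGraph graph;

    public void LoadModel(string modelPath) {
        graph = new TFGraph();
        graph.Import(File.ReadAllBytes(modelPath));
        session = new TFSession(graph);
    }

    public float[] RunInference(float[] input) {
        var runner = session.GetRunner();
        runner.AddInput(graph["input"][0], input);
        runner.Fetch(graph["output"][0]);
        var output = runner.Run();

        // The output is a 2D array of shape [batch, n]. A float[,] cannot be
        // indexed with a single index, so copy the first row element by element.
        var result = (float[,])output[0].GetValue();
        var row = new float[result.GetLength(1)];
        for (int i = 0; i < row.Length; i++) {
            row[i] = result[0, i];
        }
        return row;
    }
}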

Case Studies

No Man's Sky: Procedural Universe

Techniques Used:

- Superformula for varied shapes

- Deterministic generation (seed-based)

- Aggressive LOD system

- Creature generation via spline deformation

Lessons:

- Procedural generation does not replace curated content

- Visual variety > mechanical variety

- Players notice patterns quickly

- Post-launch content is crucial

Middle-earth: Shadow of Mordor - Nemesis System

Innovations:

- NPCs remember interactions

- Emergent personalities

- Dynamic hierarchy

- Procedural narratives

Implementation Insights:

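using System.Collections.Generic;
using UnityEngine;

public class NemesisNPC {
    // Collections are initialized up front so the first interaction
    // does not hit a null reference
    public Dictionary<string, float> memories = new Dictionary<string, float>();
    public List<Trait> personality = new List<Trait>();
    public List<Relationship> relationships = new List<Relationship>();
    public float powerLevel = 1f;

    public void RecordInteraction(Player player, InteractionType type) {
        // Remember when this kind of interaction last happened
        memories[$"{player.id}_{type}"] = Time.time;

        // Evolve personality based on interaction
        if (type == InteractionType.Defeated) {
            if (!personality.Contains(Trait.Vengeful)) {
                personality.Add(Trait.Vengeful);
            }
            powerLevel *= 1.2f;
        }
    }
}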

The Future of AI in Games

Trends for 2025-2030

Large Language Model Integration:

- NPCs with open-ended conversation

- Dynamic quest generation

- Unbounded narrative branching

- Player intent understanding

Neural Rendering:

- Assets generated in real time

- Infinite texture variations

- Unlimited character customization

- Live style transfer

Adaptive Difficulty:

- AI that learns each player's skill level

- Continuous dynamic balancing

- Less frustration and boredom

- Personalized challenge curves

Emergent Gameplay:

- Systems that surprise their developers

- Player-created mechanics

- Self-evolving games

- Community-driven evolution

Conclusion: AI as a Game Design Tool

Artificial intelligence does not replace creativity; it amplifies it. With AI, a solo developer can create worlds that rival the output of teams of hundreds. NPCs take on a life of their own. Worlds become effectively endless. Experiences become unique to each player.

The future is hybrid: human creativity guiding AI capability, procedural generation with curated quality, machine learning with game design wisdom.

Start small: implement better pathfinding, add procedural variation, experiment with behavior trees. Each AI system you learn opens up new possibilities.

Indies now compete with AAA studios not on resources but on innovation. AI levels the playing field, and your creativity is the differentiator.

The tools are available. The knowledge is accessible. The community is ready to help.

What will you create when technical limitations disappear?

The future of games is intelligent. Be part of it.